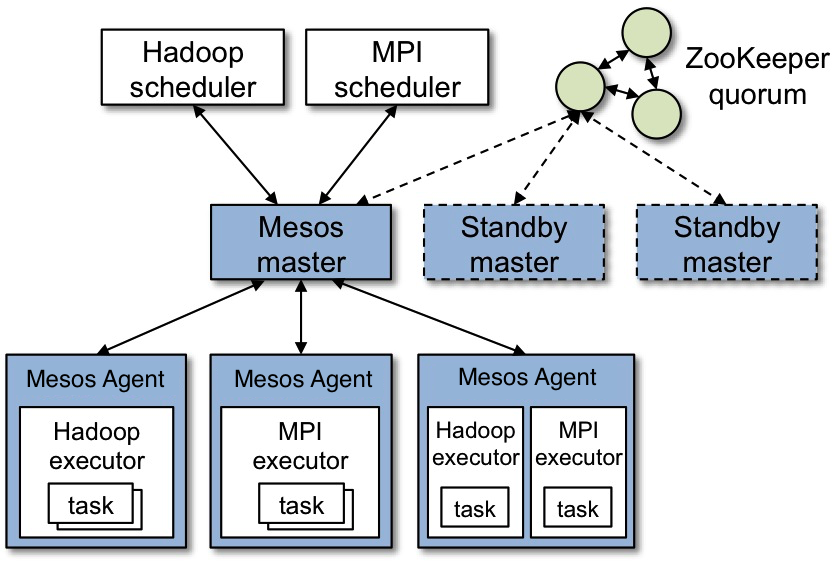

Mesos Architecture

The above figure shows the main components of Mesos. Mesos consists of a master daemon that manages agent daemons running on each cluster node, and Mesos frameworks that run tasks on these agents.

The master enables fine-grained sharing of resources (CPU, RAM, ...) across

frameworks by making them resource offers. Each resource offer contains a list

of <agent ID, resource1: amount1, resource2: amount2, ...> (NOTE: as

keyword 'slave' is deprecated in favor of 'agent', driver-based frameworks will

still receive offers with slave ID, whereas frameworks using the v1 HTTP API receive offers with agent ID). The master decides how many resources to offer to each framework according to a given organizational policy, such as fair sharing or strict priority. To support a diverse set of policies, the master employs a modular architecture that makes it easy to add new allocation modules via a plugin mechanism.

A framework running on top of Mesos consists of two components: a scheduler that registers with the master to be offered resources, and an executor process that is launched on agent nodes to run the framework's tasks (see the App/Framework development guide for more details about framework schedulers and executors). While the master determines how many resources are offered to each framework, the frameworks' schedulers select which of the offered resources to use. When a framework accepts offered resources, it passes to Mesos a description of the tasks it wants to run on them. In turn, Mesos launches the tasks on the corresponding agents.

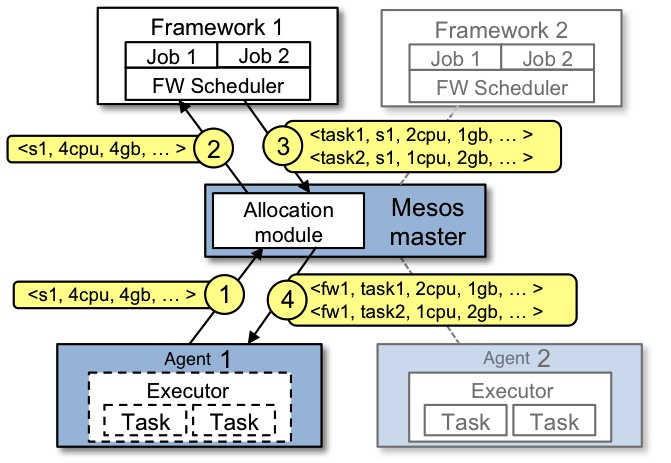

Example of resource offer

The figure below shows an example of how a framework gets scheduled to run a task.

Let's walk through the events in the figure.

- Agent 1 reports to the master that it has 4 CPUs and 4 GB of memory free. The master then invokes the allocation policy module, which tells it that framework 1 should be offered all available resources.

- The master sends a resource offer describing what is available on agent 1 to framework 1.

- The framework's scheduler replies to the master with information about two tasks to run on the agent, using <2 CPUs, 1 GB RAM> for the first task, and <1 CPUs, 2 GB RAM> for the second task.

- Finally, the master sends the tasks to the agent, which allocates appropriate resources to the framework's executor, which in turn launches the two tasks (depicted with dotted-line borders in the figure). Because 1 CPU and 1 GB of RAM are still unallocated, the allocation module may now offer them to framework 2.

In addition, this resource offer process repeats when tasks finish and new resources become free.

While the thin interface provided by Mesos allows it to scale and allows the frameworks to evolve independently, one question remains: how can the constraints of a framework be satisfied without Mesos knowing about these constraints? For example, how can a framework achieve data locality without Mesos knowing which nodes store the data required by the framework? Mesos answers these questions by simply giving frameworks the ability to reject offers. A framework will reject the offers that do not satisfy its constraints and accept the ones that do. In particular, we have found that a simple policy called delay scheduling, in which frameworks wait for a limited time to acquire nodes storing the input data, yields nearly optimal data locality.

You can also read much more about the Mesos architecture in this technical paper.

Video and Slides of Mesos Presentations

(Listed in reverse chronological order)

MesosCon North America 2018

Jolt: Running Distributed, Fault-Tolerant Tests at Scale using Mesos

Video Sunil Shah, Kyle Kelly, and Timmy Zhu Presented November 1, 2017 at Bay Area Mesos User Group Meetup

MesosCon Europe 2017

MesosCon North America 2017

MesosCon Asia 2017

MesosCon Asia 2016

MesosCon Europe 2016

MesosCon North America 2016

MesosCon Europe 2015

MesosCon North America 2015

Building and Deploying Applications to Apache Mesos

Slides Joe Stein Presented February 26, 2015 at DigitalOcean Community Meetup

MesosCon 2014

Datacenter Computing with Apache Mesos

Slides Paco Nathan Presented April 15, 2014 at Big Data DC Meetup

Apache Spark at Viadeo (Running on Mesos)

Video + Slides Eugen Cepoi Presented April 9, 2014 at Paris Hadoop User Group

Mesos, HubSpot, and Singularity

Video Tom Petr Presented April 3rd, 2014 at @TwitterOSS #conf

Building Distributed Frameworks on Mesos

Video Benjamin Hindman Presented March 25th, 2014 at Aurora and Mesos Frameworks Meetup

Introduction to Apache Aurora

Video Bill Farner Presented March 25th, 2014 at Aurora and Mesos Frameworks Meetup

Improving Resource Efficiency with Apache Mesos

Video Christina Delimitrou Presented April 3rd, 2014 at @TwitterOSS #conf

Apache Mesos as an SDK for Building Distributed Frameworks

Slides Paco Nathan Presented February 13th, 2014 at Strata

Run your Data Center like Google's with Apache Mesos

Video and Demo Abhishek Parolkar Presented November 14th, 2013 at Cloud Expo Asia 2013

Datacenter Management with Mesos

Video Benjamin Hindman Presented August 29th, 2013 at AMP Camp

Building a Framework on Mesos: A Case Study with Jenkins

Video Vinod Kone Presented July 25, 2013 at SF Mesos Meetup

Hadoop on Mesos

Video Brenden Matthews Presented July 25, 2013 at SF Mesos Meetup

Introduction to Apache Mesos

Slides Benjamin Hindman Presented August 20, 2013 at NYC Mesos Meetup

Chronos: A Distributed, Fault-Tolerant and Highly Available Job Orchestration Framework for Mesos

Slides Florian Leibert Presented August 20, 2013 at NYC Mesos Meetup

Airbnb Tech Talk

Video Benjamin Hindman Presented September 6, 2012 at Airbnb

Managing Twitter Clusters with Mesos

Video Benjamin Hindman Presented August 22, 2012 at AMP Camp

Mesos: A Platform for Fine-Grained Resource Sharing in Datacenters

Video Matei Zaharia Presented March 2011 at UC Berkeley

Mesos: Efficiently Sharing the Datacenter

Video Benjamin Hindman Presented November 8, 2010 at LinkedIn

Mesos: A Resource Management Platform for Hadoop and Big Data Clusters

Video Matei Zaharia Presented Summer 2010 at Yahoo

Apache Mesos - Paid Training

Automated Machine Learning Pipeline with Mesos

Video Karl Whitford Packt (November 2017)

Docker, Apache Mesos & DCOS: Run and manage cloud datacenter (Video)

Manuj Aggarwal Packt (January 2018)

Mesos Release and Support policy

The Mesos versioning and release policy gives operators and developers clear guidelines on:

- Making modifications to the existing APIs without affecting backward compatibility.

- How long a Mesos API will be supported.

- Upgrading a Mesos installation across release versions.

This document describes the release strategy for Mesos post 1.0.0 release.

Release Schedule

Mesos releases are time-based, though we do make limited adjustments to the release schedule to accommodate feature development. This gives users and developers a predictable cadence to consume and produce features, while ensuring that each release can include the developments that users are waiting for.

If a feature is not ready by the time a release is cut, that feature should be disabled. This means that features should be developed in such a way that they are opt-in by default and can be easily disabled (e.g., flag).

A new Mesos release is cut approximately every 3 months. The versioning scheme is SemVer. Typically, the minor release version is incremented by 1 (e.g., 1.1, 1.2, 1.3 etc) for every release, unless it is a major release.

Every (minor) release is a stable release and recommended for production use. This means a release candidate will go through rigorous testing (unit tests, integration tests, benchmark tests, cluster tests, scalability, etc.) before being officially released. In the rare case that a regular release is not deemed stable, a patch release will be released that will stabilize it.

At any given time, 3 releases are supported: the latest release and the two prior. Support means fixing of critical issues that affect the release. Once an issue is deemed critical, it will be fixed in only those affected releases that are still supported. This is called a patch release and increments the patch version by 1 (e.g., 1.2.1). Once a release reaches End Of Life (i.e., support period has ended), no more patch releases will be made for that release. Note that this is not related to backwards compatibility guarantees and deprecation periods (discussed later).

Which issues are considered critical?

- Security fixes

- Compatibility regressions

- Functional regressions

- Performance regressions

- Fixes for 3rd party integration (e.g., Docker remote API)

Whether an issue is considered critical or not is sometimes subjective. In some cases it is obvious and sometimes it is fuzzy. Users should work with committers to figure out the criticality of an issue and get agreement and commitment for support.

Patch releases are normally done once per month.

If a particular issue is affecting a user and the user cannot wait until the next scheduled patch release, they can request an off-schedule patch release for a specific supported version. This should be done by sending an email to the dev list.

Upgrades

All stable releases will be loosely compatible. Loose compatibility means:

- Master or agent can be upgraded to a new release version as long as they or the ecosystem components (scheduler, executor, zookeeper, service discovery layer, monitoring etc) do not depend on deprecated features (e.g., deprecated flags, deprecated metrics).

- There should be no unexpected effect on externally visible behavior that is not deprecated. See API compatibility section for what should be expected for Mesos APIs.

NOTE: The compatibility guarantees do not apply to modules yet. See Modules section below for details.

This means users should be able to upgrade (as long as they are not depending on deprecated / removed features) Mesos master or agent from a stable release version N directly to another stable release version M without having to go through intermediate release versions. For the purposes of upgrades, a stable release means the release with the latest patch version. For example, among 1.2.0, 1.2.1, 1.3.0, 1.4.0, 1.4.1 releases 1.2.1, 1.3.0 and 1.4.1 are considered stable and so a user should be able to upgrade from 1.2.1 directly to 1.4.1. Look at the API compatability section below for how frameworks can do seamless upgrades.

The deprecation period for any given feature will be 6 months. Having a set period allows Mesos developers to not indefinitely accrue technical debt and allows users time to plan for upgrades.

The detailed information about upgrading to a particular Mesos version would be posted here.

API versioning

The Mesos APIs (constituting Scheduler, Executor, Internal, Operator/Admin APIs) will have a version in the URL. The versioned URL will have a prefix of /api/vN where "N" is the version of the API. The "/api" prefix is chosen to distinguish API resources from Web UI paths.

Examples:

- http://localhost:5050/api/v1/scheduler : Scheduler HTTP API hosted by the master.

- http://localhost:5051/api/v1/executor : Executor HTTP API hosted by the agent.

A given Mesos installation might host multiple versions of the same API i.e., Scheduler API v1 and/or v2 etc.

API version vs Release version

- To keep things simple, the stable version of the API will correspond to the major release version of Mesos.

- For example, v1 of the API will be supported by Mesos release versions 1.0.0, 1.4.0, 1.20.0 etc.

- vN version of the API might also be supported by release versions of N-1 series but the vN API is not considered stable until the last release version of N-1 series.

- For example, v2 of the API might be introduced in Mesos 1.12.0 release but it is only considered stable in Mesos 1.21.0 release if it is the last release of "1" series. Note that all Mesos 1.x.y versions will still support v1 of the API.

- The API version is only bumped if we need to make a backwards incompatible API change. We will strive to support a given API version for at least a year.

- The deprecation clock for vN-1 API will start as soon as we release "N.0.0" version of Mesos. We will strive to give enough time (e.g., 6 months) for frameworks/operators to upgrade to vN API before we stop supporting vN-1 API.

API Compatibility

The API compatibility is determined by the corresponding protobuf guarantees.

As an example, the following are considered "backwards compatible" changes for Scheduler API:

- Adding new types of Calls i.e., new types of HTTP requests to "/scheduler".

- Adding new optional fields to existing requests to "/scheduler".

- Adding new types of Events i.e., new types of chunks streamed on "/scheduler".

- Adding new header fields to chunked response streamed on "/scheduler".

- Adding new fields (or changing the order of fields) to chunks' body streamed on "/scheduler".

- Adding new API resources (e.g., "/foobar").

The following are considered backwards incompatible changes for Scheduler API:

- Adding new required fields to existing requests to "/scheduler".

- Renaming/removing fields from existing requests to "/scheduler".

- Renaming/removing fields from chunks streamed on "/scheduler".

- Renaming/removing existing Calls.

Implementation Details

Release branches

For regular releases, the work is done on the master branch. There are no feature branches but there will be release branches.

When it is time to cut a minor release, a new branch (e.g., 1.2.x) is created off the master branch. We chose 'x' instead of patch release number to disambiguate branch names from tag names. Then the first RC (-rc1) is tagged on the release branch. Subsequent RCs, in case the previous RCs fail testing, should be tagged on the release branch.

Patch releases are also based off the release branches. Typically the fix for an issue that is affecting supported releases lands on the master branch and is then backported to the release branch(es). In rare cases, the fix might directly go into a release branch without landing on master (e.g., fix / issue is not applicable to master).

Having a branch for each minor release reduces the amount of work a release manager needs to do when it is time to do a release. It is the responsibility of the committer of a fix to commit it to all the affecting release branches. This is important because the committer has more context about the issue / fix at the time of the commit than a release manager at the time of release. The release manager of a minor release will be responsible for all its patch releases as well. Just like the master branch, history rewrites are not allowed in the release branch (i.e., no git push --force).

API protobufs

Most APIs in Mesos accept protobuf messages with a corresponding JSON field mapping. To support multiple versions of the API, we decoupled the versioned protobufs backing the API from the "internal" protobufs used by the Mesos code.

For example, the protobufs for the v1 Scheduler API are located at:

include/mesos/v1/scheduler/scheduler.proto

package mesos.v1.scheduler;

option java_package = "org.apache.mesos.v1.scheduler";

option java_outer_classname = "Protos";

...

The corresponding internal protobufs for the Scheduler API are located at:

include/mesos/scheduler/scheduler.proto

package mesos.scheduler;

option java_package = "org.apache.mesos.scheduler";

option java_outer_classname = "Protos";

...

The users of the API send requests (and receive responses) based on the versioned protobufs. We implemented evolve/devolve converters that can convert protobufs from any supported version to the internal protobuf and vice versa.

Internally, message passing between various Mesos components would use the internal unversioned protobufs. When sending response (if any) back to the user of the API, the unversioned protobuf would be converted back to a versioned protobuf.

Building

Downloading Mesos

There are different ways you can get Mesos:

1. Download the latest stable release from Apache (Recommended)

$ wget https://downloads.apache.org/mesos/1.11.0/mesos-1.11.0.tar.gz

$ tar -zxf mesos-1.11.0.tar.gz

2. Clone the Mesos git repository (Advanced Users Only)

$ git clone https://gitbox.apache.org/repos/asf/mesos.git

NOTE: If you have problems running the above commands, you may need to first run through the System Requirements section below to install the wget, tar, and git utilities for your system.

System Requirements

Mesos runs on Linux (64 Bit) and Mac OS X (64 Bit). To build Mesos from source, GCC 4.8.1+ or Clang 3.5+ is required.

On Linux, a kernel version >= 2.6.28 is required at both build time and run time. For full support of process isolation under Linux a recent kernel >= 3.10 is required.

The Mesos agent also runs on Windows. To build Mesos from source, follow the instructions in the Windows section.

Make sure your hostname is resolvable via DNS or via /etc/hosts to allow full support of Docker's host-networking capabilities, needed for some of the Mesos tests. When in doubt, please validate that /etc/hosts contains your hostname.

Ubuntu 14.04

Following are the instructions for stock Ubuntu 14.04. If you are using a different OS, please install the packages accordingly.

# Update the packages.

$ sudo apt-get update

# Install a few utility tools.

$ sudo apt-get install -y tar wget git

# Install the latest OpenJDK.

$ sudo apt-get install -y openjdk-7-jdk

# Install autotools (Only necessary if building from git repository).

$ sudo apt-get install -y autoconf libtool

# Install other Mesos dependencies.

$ sudo apt-get -y install build-essential python-dev python-six python-virtualenv libcurl4-nss-dev libsasl2-dev libsasl2-modules maven libapr1-dev libsvn-dev

Ubuntu 16.04

Following are the instructions for stock Ubuntu 16.04. If you are using a different OS, please install the packages accordingly.

# Update the packages.

$ sudo apt-get update

# Install a few utility tools.

$ sudo apt-get install -y tar wget git

# Install the latest OpenJDK.

$ sudo apt-get install -y openjdk-8-jdk

# Install autotools (Only necessary if building from git repository).

$ sudo apt-get install -y autoconf libtool

# Install other Mesos dependencies.

$ sudo apt-get -y install build-essential python-dev python-six python-virtualenv libcurl4-nss-dev libsasl2-dev libsasl2-modules maven libapr1-dev libsvn-dev zlib1g-dev iputils-ping

Mac OS X 10.11 (El Capitan), macOS 10.12 (Sierra)

Following are the instructions for Mac OS X El Capitan. When building Mesos with the Apple-provided toolchain, the Command Line Tools from XCode >= 8.0 are required; XCode 8 requires Mac OS X 10.11.5 or newer.

# Install Python 3: https://www.python.org/downloads/

# Install Command Line Tools. The Command Line Tools from XCode >= 8.0 are required.

$ xcode-select --install

# Install Homebrew.

$ ruby -e "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/master/install)"

# Install Java.

$ brew install Caskroom/cask/java

# Install libraries.

$ brew install wget git autoconf automake libtool subversion maven xz

# Install Python dependencies.

$ sudo easy_install pip

$ pip install virtualenv

When compiling on macOS 10.12, the following is needed:

# There is an incompatiblity with the system installed svn and apr headers.

# We need the svn and apr headers from a brew installation of subversion.

# You may need to unlink the existing version of subversion installed via

# brew in order to configure correctly.

$ brew unlink subversion # (If already installed)

$ brew install subversion

# When configuring, the svn and apr headers from brew will be automatically

# detected, so no need to explicitly point to them.

# If the build fails due to compiler warnings, `--disable-werror` can be passed

# to configure to not treat warnings as errors.

$ ../configure

# Lastly, you may encounter the following error when the libprocess tests run:

$ ./libprocess-tests

Failed to obtain the IP address for '<hostname>'; the DNS service may not be able to resolve it: nodename nor servname provided, or not known

# If so, turn on 'Remote Login' within System Preferences > Sharing to resolve the issue.

NOTE: When upgrading from Yosemite to El Capitan, make sure to rerun xcode-select --install after the upgrade.

CentOS 6.6

Following are the instructions for stock CentOS 6.6. If you are using a different OS, please install the packages accordingly.

# Install a recent kernel for full support of process isolation.

$ sudo rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

$ sudo rpm -Uvh http://www.elrepo.org/elrepo-release-6-6.el6.elrepo.noarch.rpm

$ sudo yum --enablerepo=elrepo-kernel install -y kernel-lt

# Make the just installed kernel the one booted by default, and reboot.

$ sudo sed -i 's/default=1/default=0/g' /boot/grub/grub.conf

$ sudo reboot

# Install a few utility tools. This also forces an update of `nss`,

# which is necessary for the Java bindings to build properly.

$ sudo yum install -y tar wget git which nss

# 'Mesos > 0.21.0' requires a C++ compiler with full C++11 support,

# (e.g. GCC > 4.8) which is available via 'devtoolset-2'.

# Fetch the Scientific Linux CERN devtoolset repo file.

$ sudo wget -O /etc/yum.repos.d/slc6-devtoolset.repo http://linuxsoft.cern.ch/cern/devtoolset/slc6-devtoolset.repo

# Import the CERN GPG key.

$ sudo rpm --import http://linuxsoft.cern.ch/cern/centos/7/os/x86_64/RPM-GPG-KEY-cern

# Fetch the Apache Maven repo file.

$ sudo wget http://repos.fedorapeople.org/repos/dchen/apache-maven/epel-apache-maven.repo -O /etc/yum.repos.d/epel-apache-maven.repo

# 'Mesos > 0.21.0' requires 'subversion > 1.8' devel package, which is

# not available in the default repositories.

# Create a WANdisco SVN repo file to install the correct version:

$ sudo bash -c 'cat > /etc/yum.repos.d/wandisco-svn.repo <<EOF

[WANdiscoSVN]

name=WANdisco SVN Repo 1.8

enabled=1

baseurl=http://opensource.wandisco.com/centos/6/svn-1.8/RPMS/$basearch/

gpgcheck=1

gpgkey=http://opensource.wandisco.com/RPM-GPG-KEY-WANdisco

EOF'

# Install essential development tools.

$ sudo yum groupinstall -y "Development Tools"

# Install 'devtoolset-2-toolchain' which includes GCC 4.8.2 and related packages.

# Installing 'devtoolset-3' might be a better choice since `perf` might

# conflict with the version of `elfutils` included in devtoolset-2.

$ sudo yum install -y devtoolset-2-toolchain

# Install other Mesos dependencies.

$ sudo yum install -y apache-maven python-devel python-six python-virtualenv java-1.7.0-openjdk-devel zlib-devel libcurl-devel openssl-devel cyrus-sasl-devel cyrus-sasl-md5 apr-devel subversion-devel apr-util-devel

# Enter a shell with 'devtoolset-2' enabled.

$ scl enable devtoolset-2 bash

$ g++ --version # Make sure you've got GCC > 4.8!

# Process isolation is using cgroups that are managed by 'cgconfig'.

# The 'cgconfig' service is not started by default on CentOS 6.6.

# Also the default configuration does not attach the 'perf_event' subsystem.

# To do this, add 'perf_event = /cgroup/perf_event;' to the entries in '/etc/cgconfig.conf'.

$ sudo yum install -y libcgroup

$ sudo service cgconfig start

CentOS 7.1

Following are the instructions for stock CentOS 7.1. If you are using a different OS, please install the packages accordingly.

# Install a few utility tools

$ sudo yum install -y tar wget git

# Fetch the Apache Maven repo file.

$ sudo wget http://repos.fedorapeople.org/repos/dchen/apache-maven/epel-apache-maven.repo -O /etc/yum.repos.d/epel-apache-maven.repo

# Install the EPEL repo so that we can pull in 'libserf-1' as part of our

# subversion install below.

$ sudo yum install -y epel-release

# 'Mesos > 0.21.0' requires 'subversion > 1.8' devel package,

# which is not available in the default repositories.

# Create a WANdisco SVN repo file to install the correct version:

$ sudo bash -c 'cat > /etc/yum.repos.d/wandisco-svn.repo <<EOF

[WANdiscoSVN]

name=WANdisco SVN Repo 1.9

enabled=1

baseurl=http://opensource.wandisco.com/centos/7/svn-1.9/RPMS/\$basearch/

gpgcheck=1

gpgkey=http://opensource.wandisco.com/RPM-GPG-KEY-WANdisco

EOF'

# Parts of Mesos require systemd in order to operate. However, Mesos

# only supports versions of systemd that contain the 'Delegate' flag.

# This flag was first introduced in 'systemd version 218', which is

# lower than the default version installed by centos. Luckily, centos

# 7.1 has a patched 'systemd < 218' that contains the 'Delegate' flag.

# Explicity update systemd to this patched version.

$ sudo yum update systemd

# Install essential development tools.

$ sudo yum groupinstall -y "Development Tools"

# Install other Mesos dependencies.

$ sudo yum install -y apache-maven python-devel python-six python-virtualenv java-1.8.0-openjdk-devel zlib-devel libcurl-devel openssl-devel cyrus-sasl-devel cyrus-sasl-md5 apr-devel subversion-devel apr-util-devel

Windows

Follow the instructions in the Windows section.

Building Mesos (Posix)

# Change working directory.

$ cd mesos

# Bootstrap (Only required if building from git repository).

$ ./bootstrap

# Configure and build.

$ mkdir build

$ cd build

$ ../configure

$ make

In order to speed up the build and reduce verbosity of the logs, you can append -j <number of cores> V=0 to make.

# Run test suite.

$ make check

# Install (Optional).

$ make install

Examples

Mesos comes bundled with example frameworks written in C++, Java and Python.

The framework binaries will only be available after running make check, as

described in the Building Mesos section above.

# Change into build directory.

$ cd build

# Start Mesos master (ensure work directory exists and has proper permissions).

$ ./bin/mesos-master.sh --ip=127.0.0.1 --work_dir=/var/lib/mesos

# Start Mesos agent (ensure work directory exists and has proper permissions).

$ ./bin/mesos-agent.sh --master=127.0.0.1:5050 --work_dir=/var/lib/mesos

# Visit the Mesos web page.

$ http://127.0.0.1:5050

# Run C++ framework (exits after successfully running some tasks).

$ ./src/test-framework --master=127.0.0.1:5050

# Run Java framework (exits after successfully running some tasks).

$ ./src/examples/java/test-framework 127.0.0.1:5050

# Run Python framework (exits after successfully running some tasks).

$ ./src/examples/python/test-framework 127.0.0.1:5050

Note: These examples assume you are running Mesos on your local machine. Following them will not allow you to access the Mesos web page in a production environment (e.g. on AWS). For that you will need to specify the actual IP of your host when launching the Mesos master and ensure your firewall settings allow access to port 5050 from the outside world.

Binary Packages

Downloading the Mesos RPM

Download and install the latest stable CentOS7 RPM binary from the Repository:

$ cat > /tmp/aventer.repo <<EOF

#aventer-mesos-el - packages by mesos from aventer

[aventer-rel]

name=AVENTER stable repository $releasever

baseurl=http://rpm.aventer.biz/CentOS/$releasever/$basearch/

enabled=1

gpgkey=https://www.aventer.biz/CentOS/support_aventer.asc

EOF

$ sudo mv /tmp/aventer.repo /etc/yum.repos.d/aventer.repo

$ sudo yum update

$ sudo yum install mesos

The above instructions show how to install the latest version of Mesos for RHEL 7.

Substitute baseurl the with the appropriate URL for your operating system.

Start Mesos Master and Agent.

The RPM installation creates the directory /var/lib/mesos that can be used as a work directory.

Start the Mesos master with the following command:

$ mesos-master --work_dir=/var/lib/mesos

On a different terminal, start the Mesos agent, and associate it with the Mesos master started above:

$ mesos-agent --work_dir=/var/lib/mesos --master=127.0.0.1:5050

This is the simplest way to try out Mesos after downloading the RPM. For more complex and production setup instructions refer to the Administration section of the docs.

Mesos Runtime Configuration

The Mesos master and agent can take a variety of configuration options

through command-line arguments or environment variables. A list of the

available options can be seen by running mesos-master --help or

mesos-agent --help. Each option can be set in two ways:

-

By passing it to the binary using

--option_name=value, either specifying the value directly, or specifying a file in which the value resides (--option_name=file://path/to/file). The path can be absolute or relative to the current working directory. -

By setting the environment variable

MESOS_OPTION_NAME(the option name with aMESOS_prefix added to it).

Configuration values are searched for first in the environment, then on the command-line.

Additionally, this documentation lists only a recent snapshot of the options in

Mesos. A definitive source for which flags your version of Mesos supports can be

found by running the binary with the flag --help, for example mesos-master --help.

Master and Agent Options

These are options common to both the Mesos master and agent.

See configuration/master-and-agent.md.

Master Options

Agent Options

Libprocess Options

See configuration/libprocess.md.

Mesos Build Configuration

Autotools Options

If you have special compilation requirements, please refer to ./configure --help when configuring Mesos.

See configuration/autotools.md.

CMake Options

Install CMake 3.7+

Linux

Install the latest version of CMake from CMake.org. A self-extracting tarball is available to make this process painless.

Currently, few of the common Linux flavors package a sufficient CMake

version. Ubuntu versions 12.04 and 14.04 package CMake 2;

Ubuntu 16.04 packages CMake 3.5. If you already installed cmake from packages,

you may remove it via: apt-get purge cmake.

The standard CentOS package is CMake 2, and unfortunately even the cmake3

package in EPEL is only CMake 3.6, you may remove them via:

yum remove cmake cmake3.

Mac OS X

HomeBrew's CMake version is sufficient: brew install cmake.

Windows

Download and install the MSI from CMake.org.

NOTE: Windows needs CMake 3.8+, rather than 3.7+.

Quick Start

The most basic way to build with CMake, with no configuration, is fairly straightforward:

mkdir build

cd build

cmake ..

cmake --build .

The last step, cmake --build . can also take a --target command to build any

particular target (e.g. mesos-tests, or tests to build mesos-tests,

libprocess-tests, and stout-tests): cmake --build . --target tests. To

send arbitrary flags to the native build system underneath (e.g. make), append

the command with -- <flags to be passed>: cmake --build . -- -j4.

Also, cmake --build can be substituted by your build system of choice. For

instance, the default CMake generator on Linux produces GNU Makefiles, so after

configuring with cmake .., you can just run make tests in the build folder

like usual. Similarly, if you configure with -G Ninja to use the Ninja

generator, you can then run ninja tests to build the tests target with

Ninja.

Installable build

This example will build Mesos and install it into a custom prefix:

mkdir build && cd build

cmake -DCMAKE_INSTALL_PREFIX=/home/current_user/mesos

cmake --build . --target install

To additionally install mesos-tests executable and related test helpers

(this can be used to run Mesos tests against the installed binaries),

one can enable the MESOS_INSTALL_TESTS option.

To produce a set of binaries and libraries that will work after being

copied/moved to a different location, use MESOS_FINAL_PREFIX.

The example below employs both MESOS_FINAL_PREFIX and MESOS_INSTALL_TESTS.

On a build system:

mkdir build && cd build

cmake -DMESOS_FINAL_PREFIX=/opt/mesos -DCMAKE_INSTALL_PREFIX=/home/current_user/mesos -DMESOS_INSTALL_TESTS=ON

cmake --build . --target install

tar -czf mesos.tar.gz mesos -C /home/current_user

On a target system:

sudo tar -xf mesos.tar.gz -C /opt

# Run tests against Mesos installation

sudo /opt/mesos/bin/mesos-tests

# Start Mesos agent

sudo /opt/mesos/bin/mesos-agent --work-dir=/var/lib/mesos ...

Supported options

Examples

See CMake By Example.

Documentation

The CMake documentation is written as a reference module. The most commonly used sections are:

The wiki also has a set of useful variables.

Dependency graph

Like any build system, CMake has a dependency graph. The difference is that targets in CMake's dependency graph are much richer compared to other build systems. CMake targets have the notion of 'interfaces', where build properties are saved as part of the target, and these properties can be inherited transitively within the graph.

For example, say there is a library mylib, and anything which links it must

include its headers, located in mylib/include. When building the library, some

private headers must also be included, but not when linking to it. When

compiling the executable myprogram, mylib's public headers must be included,

but not its private headers. There is no manual step to add mylib/include to

myprogram (and any other program which links to mylib), it is instead

deduced from the public interface property of mylib. This is represented by

the following code:

# A new library with a single source file (headers are found automatically).

add_library(mylib mylib.cpp)

# The folder of private headers, not exposed to consumers of `mylib`.

target_include_directories(mylib PRIVATE mylib/private)

# The folder of public headers, added to the compilation of any consumer.

target_include_directories(mylib PUBLIC mylib/include)

# A new exectuable with a single source file.

add_executable(myprogram main.cpp)

# The creation of the link dependency `myprogram` -> `mylib`.

target_link_libraries(myprogram mylib)

# There is no additional step to add `mylib/include` to `myprogram`.

This same notion applies to practically every build property:

compile definitions via target_compile_definitions,

include directories via target_include_directories,

link libraries via target_link_libraries,

compile options via target_compile_options,

and compile features via target_compile_features.

All of these commands also take an optional argument of

<INTERFACE|PUBLIC|PRIVATE>, which constrains their transitivity in the graph.

That is, a PRIVATE include directory is recorded for the target, but not

shared transitively to anything depending on the target, PUBLIC is used

for both the target and dependencies on it, and INTERFACE is used only

for dependencies.

Notably missing from this list are link directories. CMake explicitly prefers finding and using the absolute paths to libraries, obsoleting link directories.

Common mistakes

Booleans

CMake treats ON, OFF, TRUE, FALSE, 1, and 0 all as true/false

booleans. Furthermore, variables of the form <target>-NOTFOUND are also

treated as false (this is used for finding packages).

In Mesos, we prefer the boolean types TRUE and FALSE.

See if for more info.

Conditionals

For historical reasons, CMake conditionals such as if and elseif

automatically interpolate variable names. It is therefore dangerous to

interpolate them manually, because if ${FOO} evaluates to BAR, and BAR is

another variable name, then if (${FOO}) becomes if (BAR), and BAR is then

evaluated again by the if. Stick to if (FOO) to check the value of ${FOO}.

Do not use if (${FOO}).

Also see the CMake policies CMP0012 and CMP0054.

Definitions

When using add_definitions() (which should be used rarely, as it is for

"global" compile definitions), the flags must be prefixed with -D to be

treated as preprocessor definitions. However, when using

target_compile_definitions() (which should be preferred, as it is

for specific targets), the flags do not need the prefix.

Style

In general, wrap at 80 lines, and use a two-space indent. When wrapping arguments, put the command on a separate line and arguments on subsequent lines:

target_link_libraries(

program PRIVATE

alpha

beta

gamma)

Otherwise keep it together:

target_link_libraries(program PUBLIC library)

Always keep the trailing parenthesis with the last argument.

Use a single space between conditionals and their open parenthesis, e.g.

if (FOO), but not for commands, e.g. add_executable(program).

CAPITALIZE the declaration and use of custom functions and macros (e.g.

EXTERNAL and PATCH_CMD), and do not capitalize the use of CMake built-in

(including modules) functions and macros. CAPITALIZE variables.

CMake anti-patterns

Because CMake handles much more of the grunt work for you than other build systems, there are unfortunately a lot of CMake anti-patterns you should look out for when writing new CMake code. These are some common problems that should be avoided when writing new CMake code:

Superfluous use of add_dependencies

When you've linked library a to library b with target_link_libraries(a b),

the CMake graph is already updated with the dependency information. It is

redundant to use add_dependencies(a b) to (re)specify the dependency. In fact,

this command should rarely be used.

The exceptions to this are:

- Setting a dependency from an imported library to a target added via

ExternalProject_Add. - Setting a dependency on Mesos modules since no explicit linking is done.

- Setting a dependency between executables (e.g. the

mesos-agentrequiring themesos-containerizerexecutable). In general, runtime dependencies need to be setup withadd_dependency, but never link dependencies.

Use of link_libraries or link_directories

Neither of these commands should ever be used. The only appropriate command used

to link libraries is target_link_libraries, which records the information

in the CMake dependency graph. Furthermore, imported third-party libraries

should have correct locations recorded in their respective targets, so the use

of link_directories should never be necessary. The

official documentation states:

Note that this command is rarely necessary. Library locations returned by

find_package()andfind_library()are absolute paths. Pass these absolute library file paths directly to thetarget_link_libraries()command. CMake will ensure the linker finds them.

The difference is that the former sets global (or directory level) side effects, and the latter sets specific target information stored in the graph.

Use of include_directories

This is similar to the above: the target_include_directories should always

be preferred so that the include directory information remains localized to the

appropriate targets.

Adding anything to endif ()

Old versions of CMake expected the style if (FOO) ... endif (FOO), where the

endif contained the same expression as the if command. However, this is

tortuously redundant, so leave the parentheses in endif () empty. This goes

for other endings too, such as endforeach (), endwhile (), endmacro () and

endfunction ().

Specifying header files superfluously

One of the distinct advantages of using CMake for C and C++ projects is that

adding header files to the source list for a target is unnecessary. CMake is

designed to parse the source files (.c, .cpp, etc.) and determine their

required headers automatically. The exception to this is headers generated as

part of the build (such as protobuf or the JNI headers).

Checking CMAKE_BUILD_TYPE

See the "Building debug or release configurations"

example for more information. In short, not all generators respect the variable

CMAKE_BUILD_TYPE at configuration time, and thus it must not be used in CMake

logic. A usable alternative (where supported) is a generator expression such

as $<$<CONFIG:Debug>:DEBUG_MODE>.

Remaining hacks

3RDPARTY_DEPENDENCIES

Until Mesos on Windows is stable, we keep some dependencies in an external

repository, 3rdparty. When

all dependencies are bundled with Mesos, this extra repository will no longer be

necessary. Until then, the CMake variable 3RDPARTY_DEPENDENCIES points by

default to this URL, but it can also point to the on-disk location of a local

clone of the repo. With this option you can avoid pulling from GitHub for every

clean build. Note that this must be an absolute path with forward slashes, e.g.

-D3RDPARTY_DEPENDENCIES=C:/3rdparty, otherwise it will fail on Windows.

EXTERNAL

The CMake function EXTERNAL defines a few variables that make it easy for us

to track the directory structure of a dependency. In particular, if our

library's name is boost, we invoke:

EXTERNAL(boost ${BOOST_VERSION} ${CMAKE_CURRENT_BINARY_DIR})

Which will define the following variables as side-effects in the current scope:

BOOST_TARGET(a target folder name to put dep in e.g.,boost-1.53.0)BOOST_CMAKE_ROOT(where to have CMake put the uncompressed source, e.g.,build/3rdparty/boost-1.53.0)BOOST_ROOT(where the code goes in various stages of build, e.g.,build/.../boost-1.53.0/src, which might contain foldersbuild-1.53.0-build,-lib, and so on, for each build step that dependency has)

The implementation is in 3rdparty/cmake/External.cmake.

This is not to be confused with the CMake module ExternalProject, from which

we use ExternalProject_Add to download, extract, configure, and build our

dependencies.

CMAKE_NOOP

This is a CMake variable we define in 3rdparty/CMakeLists.txt so that we can

cancel steps of ExternalProject. ExternalProject's default behavior is to

attempt to configure, build, and install a project using CMake. So when one of

these steps must be skipped, we use set it to CMAKE_NOOP so that nothing

is run instead.

CMAKE_FORWARD_ARGS

The CMAKE_FORWARD_ARGS variable defined in 3rdparty/CMakeLists.txt is sent

as the CMAKE_ARGS argument to the ExternalProject_Add macro (along with any

per-project arguments), and is used when the external project is configured as a

CMake project. If either the CONFIGURE_COMMAND or BUILD_COMMAND arguments of

ExternalProject_Add are used, then the CMAKE_ARGS argument will be ignored.

This variable ensures that compilation configurations are properly propagated to

third-party dependencies, such as compiler flags.

CMAKE_SSL_FORWARD_ARGS

The CMAKE_SSL_FORWARD_ARGS variable defined in 3rdparty/CMakeLists.txt

is like CMAKE_FORWARD_ARGS, but only used for specific external projects

that find and link against OpenSSL.

LIBRARY_LINKAGE

This variable is a shortcut used in 3rdparty/CMakeLists.txt. It is set to

SHARED when BUILD_SHARED_LIBS is true, and otherwise it is set to STATIC.

The SHARED and STATIC keywords are used to declare how a library should be

built; however, if left out then the type is deduced automatically from

BUILD_SHARED_LIBS.

MAKE_INCLUDE_DIR

This function works around a CMake issue with setting include

directories of imported libraries built with ExternalProject_Add. We have to

call this for each IMPORTED third-party dependency which has set

INTERFACE_INCLUDE_DIRECTORIES, just to make CMake happy. An example is Glog:

MAKE_INCLUDE_DIR(glog)

GET_BYPRODUCTS

This function works around a CMake issue with the Ninja

generator where it does not understand imported libraries, and instead needs

BUILD_BYPRODUCTS explicitly set. This simply allows us to use

ExternalProject_Add and Ninja. For Glog, it looks like this:

GET_BYPRODUCTS(glog)

Also see the CMake policy CMP0058.

PATCH_CMD

The CMake function PATCH_CMD generates a patch command given a patch file.

If the path is not absolute, it's resolved to the current source directory.

It stores the command in the variable name supplied. This is used to easily

patch third-party dependencies. For Glog, it looks like this:

PATCH_CMD(GLOG_PATCH_CMD glog-${GLOG_VERSION}.patch)

ExternalProject_Add(

${GLOG_TARGET}

...

PATCH_COMMAND ${GLOG_PATCH_CMD})

The implementation is in 3rdparty/cmake/PatchCommand.cmake.

Windows patch.exe

While using patch on Linux is straightforward, doing the same on Windows takes

a bit of work. PATH_CMD encapsulates this:

- Checks the cache variable

PATCHEXE_PATHforpatch.exe. - Searches for

patch.exein its default locations. - Copies

patch.exeand a custom manifest to the temporary directory. - Applies the manifest to avoid the UAC prompt.

- Uses the patched

patch.exe.

As such, PATCH_CMD lets us apply patches as we do on Linux, without requiring

an administrative prompt.

Note that on Windows, the patch file must have CRLF line endings. A file with LF

line endings will cause the error: "Assertion failed, hunk, file patch.c, line

343". For this reason, it is required to checkout the Mesos repo with git config core.autocrlf true.

Windows

Mesos 1.0.0 introduced experimental support for Windows.

Building Mesos

System Requirements

-

Install the latest Visual Studio 2017: The "Community" edition is sufficient (and free of charge). During installation, choose the "Desktop development with C++" workload.

-

Install CMake 3.8.0 or later. During installation, choose to "Add CMake to the system PATH for all users".

-

Install GNU patch for Windows.

-

If building from source, install Git.

-

Make sure there are no spaces in your build directory. For example,

C:/Program Files (x86)/mesosis an invalid build directory. -

If developing Mesos, install Python 3 (not Python 2), in order to use our

supportscripts (e.g. to post and apply patches, or lint source code).

Build Instructions

Following are the instructions for Windows 10.

# Clone (or extract) Mesos.

git clone https://gitbox.apache.org/repos/asf/mesos.git

cd mesos

# Configure using CMake for an out-of-tree build.

mkdir build

cd build

cmake .. -G "Visual Studio 15 2017 Win64" -T "host=x64"

# Build Mesos.

# To build just the Mesos agent, add `--target mesos-agent`.

cmake --build .

# The Windows agent exposes new isolators that must be used as with

# the `--isolation` flag. To get started point the agent to a working

# master, using eiher an IP address or zookeeper information.

.\src\mesos-agent.exe --master=<master> --work_dir=<work folder> --launcher_dir=<repository>\build\src

Running Mesos

If you deploy the executables to another machine, you must also install the Microsoft Visual C++ Redistributable for Visual Studio 2017.

Known Limitations

The current implementation is known to have the following limitations:

-

Only the agent should be run on Windows. The Mesos master can be launched, but only for testing as the master does not support high-availability setups on Windows.

-

While Mesos supports NTFS long paths internally, tasks which do not support long paths must be run on agent whose

--work_diris a short path. -

The minimum versions of Windows supported are: Windows 10 Creators Update (AKA version 1703, build number 15063), and Windows Server, version 1709. It is likely that this will increase, due to evolving Windows container support and developer features which ease porting.

-

The ability to create symlinks as a non-admin user requires Developer Mode to be enabled. Otherwise the agent will need to be run under an administrator.

Build Configuration Examples

Building with Ninja

Instead of using MSBuild, it is also possible to build Mesos on

Windows using Ninja, which can result in

significantly faster builds. To use Ninja, you need to download it and

ensure ninja.exe is in your PATH.

- Download the Windows binary.

- Unzip it and place

ninja.exein yourPATH. - Open an "x64 Native Tools Command Prompt for VS 2017" to set your environment.

- In that command prompt, type

powershellto use a better shell. - Similar to above, configure CMake with

cmake .. -G Ninja. - Now you can use

ninjato build the various targets. - You may want to use

ninja -vto make it verbose, as it's otherwise very quiet.

Note that with Ninja it is imperative to open the correct developer command prompt so that the 64-bit build tools are used, as Ninja does not otherwise know how to find them.

Building with Java

This enables more unit tests, but we do not yet officially produce

mesos-master.

When building with Java on Windows, you must add the Maven build tool to

your path. The JAVA_HOME environment variable must also be manually set.

An installation of the Java SDK can be found form Oracle.

As of this writing, Java 9 is not yet supported, but Java 8 has been tested.

The Java build defaults to OFF because it is slow. To build the Java

components on Windows, turn it ON:

mkdir build; cd build

$env:PATH += ";C:\...\apache-maven-3.3.9\bin\"

$env:JAVA_HOME = "C:\Program Files\Java\jdk1.8.0_144"

cmake .. -DENABLE_JAVA=ON -G "Visual Studio 15 2017 Win64" -T "host=x64"

cmake --build . --target mesos-java

Note that the mesos-java library does not have to be manually built; as

libmesos will link it when Java is enabled.

Unfortunately, on Windows the FindJNI CMake module will populate JAVA_JVM_LIBRARY with

the path to the static jvm.lib, but this variable must point to the shared

library, jvm.dll, as it is loaded at runtime. Set it correctly like this:

$env:JAVA_JVM_LIBRARY = "C:\Program Files\Java\jdk1.8.0_144\jre\bin\server\jvm.dll"

The library may still fail to load at runtime with the following error:

"The specified module could not be found."

If this is the case, and the path to jvm.dll is verified to be correct, then

the error message actually indicates that the dependencies of jvm.dll could

not be found. On Windows, the DLL search path includes the environment variable

PATH, so add the bin folder which contains server\jvm.dll to PATH:

$env:PATH += ";C:\Program Files\Java\jdk1.8.0_144\jre\bin"

Building with OpenSSL

When building with OpenSSL on Windows, you must build or install a distribution of OpenSSL for Windows. A commonly chosen distribution is Shining Light Productions' OpenSSL.

As of this writing, OpenSSL 1.1.x is supported.

Use -DENABLE_SSL=ON to build with OpenSSL.

Note that it will link to OpenSSL dynamically, so if the built executables are deployed elsewhere, that machine also needs OpenSSL installed.

Beware that the OpenSSL installation, nor Mesos itself, comes with a certificate bundle, and so it is likely that certificate verification will fail.

ClusterD Agent Options

Required Flags

| Flag | Explanation |

|---|---|

| --master=VALUE |

May be one of:

host:port

zk://host1:port1,host2:port2,.../path

zk://username:password@host1:port1,host2:port2,.../path

file:///path/to/file (where file contains one of the above)

|

| --work_dir=VALUE |

Path of the agent work directory. This is where executor sandboxes

will be placed, as well as the agent's checkpointed state in case of

failover. Note that locations like /tmp which are cleaned

automatically are not suitable for the work directory when running in

production, since long-running agents could lose data when cleanup

occurs. (Example: /var/lib/mesos/agent)

|

Optional Flags

| Flag | Explanation |

|---|---|

| --acls=VALUE |

The value could be a JSON-formatted string of ACLs

or a file path containing the JSON-formatted ACLs used

for authorization. Path could be of the form file:///path/to/file

or /path/to/file.

Note that if the --authorizer flag is provided with a value

other than local, the ACLs contents will be

ignored.

See the ACLs protobuf in acls.proto for the expected format.

Example:

|

| --agent_features=VALUE |

JSON representation of agent features to whitelist. We always require

'MULTI_ROLE', 'HIERARCHICAL_ROLE', 'RESERVATION_REFINEMENT',

'AGENT_OPERATION_FEEDBACK', 'RESOURCE_PROVIDER', 'AGENT_DRAINING', and

'TASK_RESOURCE_LIMITS'.

Example:

|

| --agent_subsystems=VALUE, --slave_subsystems=VALUE |

List of comma-separated cgroup subsystems to run the agent binary

in, e.g., memory,cpuacct. The default is none.

Present functionality is intended for resource monitoring and

no cgroup limits are set, they are inherited from the root mesos

cgroup.

|

| --effective_capabilities=VALUE |

JSON representation of the Linux capabilities that the agent will

grant to a task that will be run in containers launched by the

containerizer (currently only supported by the Mesos Containerizer).

This set overrides the default capabilities for the user but not

the capabilities requested by the framework.

To set capabilities the agent should have the SETPCAP capability.

This flag is effective iff linux/capabilities isolation is enabled.

When linux/capabilities isolation is enabled, the absence of this flag

implies that the operator intends to allow ALL capabilities.

Example:

|

| --bounding_capabilities=VALUE |

JSON representation of the Linux capabilities that the operator

will allow as the maximum level of privilege that a task launched

by the containerizer may acquire (currently only supported by the

Mesos Containerizer).

This flag is effective iff linux/capabilities isolation is enabled.

When linux/capabilities isolation is enabled, the absence of this flag

implies that the operator intends to allow ALL capabilities.

This flag has the same syntax as --effective_capabilities.

|

| --appc_simple_discovery_uri_prefix=VALUE |

URI prefix to be used for simple discovery of appc images,

e.g., http://, https://,

hdfs://.

(default: http://)

|

| --appc_store_dir=VALUE | Directory the appc provisioner will store images in. (default: /tmp/mesos/store/appc) |

| --attributes=VALUE |

Attributes of the agent machine, in the form:

rack:2 or rack:2;u:1

|

| --[no-]authenticate_http_executors |

If true, only authenticated requests for the HTTP executor API are

allowed. If false, unauthenticated requests are also allowed. This

flag is only available when Mesos is built with SSL support.

(default: false)

|

| --authenticatee=VALUE |

Authenticatee implementation to use when authenticating against the

master. Use the default crammd5, or

load an alternate authenticatee module using --modules. (default: crammd5)

|

| --authentication_backoff_factor=VALUE |

The agent will time out its authentication with the master based on

exponential backoff. The timeout will be randomly chosen within the

range [min, min + factor*2^n] where n is the number

of failed attempts. To tune these parameters, set the

--authentication_timeout_[min|max|factor] flags. (default: 1secs)

|

| --authentication_timeout_min=VALUE |

The minimum amount of time the agent waits before retrying authenticating

with the master. See --authentication_backoff_factor for more

details. (default: 5secs)

NOTE that since authentication retry cancels the previous authentication

request, one should consider what is the normal authentication delay when

setting this flag to prevent premature retry.

|

| --authentication_timeout_max=VALUE |

The maximum amount of time the agent waits before retrying authenticating

with the master. See --authentication_backoff_factor for more

details. (default: 1mins)

|

| --authorizer=VALUE |

Authorizer implementation to use when authorizing actions that

require it.

Use the default local, or

load an alternate authorizer module using --modules.

Note that if the --authorizer flag is provided with a value

other than the default local, the ACLs

passed through the --acls flag will be ignored.

|

| --[no]-cgroups_cpu_enable_pids_and_tids_count | Cgroups feature flag to enable counting of processes and threads inside a container. (default: false) |

| --cgroups_destroy_timeout=VALUE | Amount of time allowed to destroy a cgroup hierarchy. If the cgroup hierarchy is not destroyed within the timeout, the corresponding container destroy is considered failed. (default: 1mins) |

| --[no]-cgroups_enable_cfs | Cgroups feature flag to enable hard limits on CPU resources via the CFS bandwidth limiting subfeature. (default: false) |

| --enable_cgroupsv2 | Enable support for cgroupsv2 (Currently only the Docker executor supports CgroupsV2. Set these flag to true, will break the Mesos contrainerizer). |

| --cgroups_hierarchy=VALUE | The path to the cgroups hierarchy root. (default: /sys/fs/cgroup) |

| --[no]-cgroups_limit_swap | Cgroups feature flag to enable memory limits on both memory and swap instead of just memory. (default: false) |

| --cgroups_net_cls_primary_handle | A non-zero, 16-bit handle of the form `0xAAAA`. This will be used as the primary handle for the net_cls cgroup. |

| --cgroups_net_cls_secondary_handles |

A range of the form 0xAAAA,0xBBBB, specifying the valid secondary

handles that can be used with the primary handle. This will take

effect only when the --cgroups_net_cls_primary_handle is set.

|

| --allowed_devices |

JSON object representing the devices that will be additionally

whitelisted by cgroups devices subsystem. Noted that the following

devices always be whitelisted by default:

cgroups/devices is set in

--isolation flag.

Example:

|

| --cgroups_root=VALUE | Name of the root cgroup. (default: mesos) |

| --[no-]check_agent_port_range_only |

When this is true, the network/ports isolator allows tasks to

listen on additional ports provided they fall outside the range

published by the agent's resources. Otherwise tasks are restricted

to only listen on ports for which they have been assigned resources.

(default: false); This flag can't be used in conjunction with

--container_ports_isolated_range.

|

| --container_disk_watch_interval=VALUE |

The interval between disk quota checks for containers. This flag is

used for the disk/du isolator. (default: 15secs)

|

| --container_logger=VALUE |

The name of the container logger to use for logging container

(i.e., executor and task) stdout and stderr. The default

container logger writes to stdout and stderr files

in the sandbox directory.

|

| --container_ports_isolated_range=VALUE |

When this flag is set, network/ports isolator will only enforce

the port isolation for the given range of ports range. This flag can't

be used in conjunction with --check_agent_port_range_only.

Example: [0-35000]

|

| --container_ports_watch_interval=VALUE |

Interval at which the network/ports isolator should check for

containers listening on ports they don't have resources for.

(default: 30secs)

|

| --containerizers=VALUE |

Comma-separated list of containerizer implementations

to compose in order to provide containerization.

Available options are mesos and

docker (on Linux). The order the containerizers

are specified is the order they are tried.

(default: mesos)

|

| --credential=VALUE |

Path to a JSON-formatted file containing the credential

to use to authenticate with the master.

Path could be of the form file:///path/to/file or /path/to/file.

Example:

|

| --default_container_dns=VALUE |

JSON-formatted DNS information for CNI networks (Mesos containerizer)

and CNM networks (Docker containerizer). For CNI networks, this flag

can be used to configure `nameservers`, `domain`, `search` and

`options`, and its priority is lower than the DNS information returned

by a CNI plugin, but higher than the DNS information in agent host's

/etc/resolv.conf. For CNM networks, this flag can be used to configure

`nameservers`, `search` and `options`, it will only be used if there

is no DNS information provided in the ContainerInfo.docker.parameters

message.

See the ContainerDNS message in `flags.proto` for the expected format.

Example:

|

| --default_container_info=VALUE |

JSON-formatted ContainerInfo that will be included into

any ExecutorInfo that does not specify a ContainerInfo.

See the ContainerInfo protobuf in mesos.proto for

the expected format.

Example:

|

| --default_role=VALUE |

Any resources in the --resources flag that

omit a role, as well as any resources that

are not present in --resources but that are

automatically detected, will be assigned to

this role. (default: *)

|

| --default_container_shm_size |

The default size of the /dev/shm for the container which has its own

/dev/shm but does not specify the shm_size field in its

LinuxInfo. The format is [number][unit], number must be

a positive integer and unit can be B (bytes), KB (kilobytes), MB

(megabytes), GB (gigabytes) or TB (terabytes). Note that this flag is

only relevant for the Mesos Containerizer and it will be ignored if

the namespaces/ipc isolator is not enabled.

|

| --[no-]disallow_sharing_agent_ipc_namespace |

If set to true, each top-level container will have its own IPC

namespace and /dev/shm, and if the framework requests to share the agent IPC

namespace and /dev/shm for the top level container, the container launch will

be rejected. If set to false, the top-level containers will share

the IPC namespace and /dev/shm with agent if the framework requests it. This

flag will be ignored if the namespaces/ipc isolator is not enabled.

(default: false)

|

| --[no-]disallow_sharing_agent_pid_namespace |

If set to true, each top-level container will have its own pid

namespace, and if the framework requests to share the agent pid namespace for

the top level container, the container launch will be rejected. If set to

false, the top-level containers will share the pid namespace with

agent if the framework requests it. This flag will be ignored if the

namespaces/pid isolator is not enabled.

(default: false)

|

| --disk_profile_adaptor=VALUE | The name of the disk profile adaptor module that storage resource providers should use for translating a 'disk profile' into inputs consumed by various Container Storage Interface (CSI) plugins. If this flag is not specified, the default behavior for storage resource providers is to only expose resources for pre-existing volumes and not publish RAW volumes. |

| --disk_watch_interval=VALUE | Periodic time interval (e.g., 10secs, 2mins, etc) to check the overall disk usage managed by the agent. This drives the garbage collection of archived information and sandboxes. (default: 1mins) |

| --docker=VALUE | The absolute path to the docker executable for docker containerizer. (default: docker) |

| --docker_config=VALUE |

The default docker config file for agent. Can be provided either as an

absolute path pointing to the agent local docker config file, or as a

JSON-formatted string. The format of the docker config file should be

identical to docker's default one (e.g., either

$HOME/.docker/config.json or $HOME/.dockercfg).

Example JSON ($HOME/.docker/config.json):

|

| --docker_ignore_runtime=VALUE |

Ignore any runtime configuration specified in the Docker image. The

Mesos containerizer will not propagate Docker runtime specifications

such as WORKDIR, ENV and CMD

to the container.

(default: false)

|

| --[no-]docker_kill_orphans | Enable docker containerizer to kill orphaned containers. You should consider setting this to false when you launch multiple agents in the same OS, to avoid one of the DockerContainerizer removing docker tasks launched by other agents. (default: true) |

| --docker_mesos_image=VALUE | The Docker image used to launch this Mesos agent instance. If an image is specified, the docker containerizer assumes the agent is running in a docker container, and launches executors with docker containers in order to recover them when the agent restarts and recovers. |

| --docker_registry=VALUE |

The default url for Mesos containerizer to pull Docker images. It could

either be a Docker registry server url (e.g., https://registry.docker.io),

or a source that Docker image archives (result of docker save) are

stored. The Docker archive source could be specified either as a local

path (e.g., /tmp/docker/images), or as an HDFS URI (*experimental*)

(e.g., hdfs://localhost:8020/archives/). Note that this option won't

change the default registry server for Docker containerizer.

(default: https://registry-1.docker.io)

|

| --docker_remove_delay=VALUE |

The amount of time to wait before removing docker containers (i.e., `docker rm`)

after Mesos regards the container as TERMINATED

(e.g., 3days, 2weeks, etc).

This only applies for the Docker Containerizer. (default: 6hrs)

|

| --docker_socket=VALUE |

Resource used by the agent and the executor to provide CLI access to the

Docker daemon. On Unix, this is typically a path to a socket, such as

/var/run/docker.sock. On Windows this must be a named pipe,

such as //./pipe/docker_engine. NOTE: This must be the path

used by the Docker image used to run the agent. (default:

//./pipe/docker_engine on Windows; /var/run/docker.sock on other

platforms).

|

| --docker_stop_timeout=VALUE | The time docker daemon waits after stopping a container before killing that container. This flag is deprecated; use task's kill policy instead. (default: 0ns) |

| --docker_store_dir=VALUE | Directory the Docker provisioner will store images in (default: /tmp/mesos/store/docker) |

| --docker_volume_checkpoint_dir=VALUE | The root directory where we checkpoint the information about docker volumes that each container uses. (default: /var/run/mesos/isolators/docker/volume) |

| --[no-]docker_volume_chown | Whether to chown the docker volume's mount point non-recursively to the container user. Please notice that this flag is not recommended to turn on if there is any docker volume shared by multiple non-root users. By default, this flag is off. (default: false) |

| --domain_socket_location=VALUE |

Location on the host filesystem of the domain socket used for

communication with executors. Alternatively, this can be set to

'systemd:<identifier>' to use the domain socket

with the given identifier, which is expected to be passed by systemd.

This flag will be ignored unless the Total path length must be less than 108 characters. Will be set to |

| --[no-]enforce_container_disk_quota |

Whether to enable disk quota enforcement for containers. This flag

is used by the disk/du and disk/xfs isolators. (default: false)

|

| --[no-]enforce_container_ports |

Whether to enable network port enforcement for containers. This flag

is used by the network/ports isolator. (default: false)

|

| --executor_environment_variables=VALUE |

JSON object representing the environment variables that should be

passed to the executor, and thus subsequently task(s). By default this

flag is none. Users have to define executor environment explicitly.

Example:

|

| --executor_registration_timeout=VALUE | Amount of time to wait for an executor to register with the agent before considering it hung and shutting it down (e.g., 60secs, 3mins, etc) (default: 1mins) |

| --executor_reregistration_timeout=VALUE | The timeout within which an executor is expected to reregister after the agent has restarted, before the agent considers it gone and shuts it down. Note that currently, the agent will not reregister with the master until this timeout has elapsed (see MESOS-7539). (default: 2secs) |

| --executor_reregistration_retry_interval=VALUE | For PID-based executors, how long the agent waits before retrying the reconnect message sent to the executor during recovery. NOTE: Do not use this unless you understand the following (see MESOS-5332): PID-based executors using Mesos libraries >= 1.1.2 always re-link with the agent upon receiving the reconnect message. This avoids the executor replying on a half-open TCP connection to the old agent (possible if netfilter is dropping packets, see: MESOS-7057). However, PID-based executors using Mesos libraries < 1.1.2 do not re-link and are therefore prone to replying on a half-open connection after the agent restarts. If we only send a single reconnect message, these "old" executors will reply on their half-open connection and receive a RST; without any retries, they will fail to reconnect and be killed by the agent once the executor re-registration timeout elapses. To ensure these "old" executors can reconnect in the presence of netfilter dropping packets, we introduced optional retries of the reconnect message. This results in "old" executors correctly establishing a link when processing the second reconnect message. (default: no retries) |

| --max_completed_executors_per_framework=VALUE | Maximum number of completed executors per framework to store in memory. (default: 150) |

| --jwt_secret_key=VALUE | Path to a file containing the key used when generating JWT secrets. This flag is only available when Mesos is built with SSL support. |

| --executor_shutdown_grace_period=VALUE | Default amount of time to wait for an executor to shut down (e.g. 60secs, 3mins, etc). ExecutorInfo.shutdown_grace_period overrides this default. Note that the executor must not assume that it will always be allotted the full grace period, as the agent may decide to allot a shorter period, and failures / forcible terminations may occur. (default: 5secs) |

| --fetcher_cache_dir=VALUE |

Parent directory for fetcher cache directories

(one subdirectory per agent). (default: /tmp/mesos/fetch)

Directory for the fetcher cache. The agent will clear this directory on startup. It is recommended to set this value to a separate volume for several reasons:

|

| --fetcher_cache_size=VALUE | Size of the fetcher cache in Bytes. (default: 2GB) |

| --fetcher_stall_timeout=VALUE | Amount of time for the fetcher to wait before considering a download being too slow and abort it when the download stalls (i.e., the speed keeps below one byte per second). NOTE: This feature only applies when downloading data from the net and does not apply to HDFS. (default: 1mins) |

| --frameworks_home=VALUE | Directory path prepended to relative executor URIs (default: ) |

| --gc_delay=VALUE | Maximum amount of time to wait before cleaning up executor directories (e.g., 3days, 2weeks, etc). Note that this delay may be shorter depending on the available disk usage. (default: 1weeks) |

| --gc_disk_headroom=VALUE |

Adjust disk headroom used to calculate maximum executor

directory age. Age is calculated by:

gc_delay * max(0.0, (1.0 - gc_disk_headroom - disk usage))

every --disk_watch_interval duration. gc_disk_headroom must

be a value between 0.0 and 1.0 (default: 0.1)

|

| --[no-]gc_non_executor_container_sandboxes |

Determines whether nested container sandboxes created via the

LAUNCH_CONTAINER and LAUNCH_NESTED_CONTAINER APIs will be

automatically garbage collected by the agent upon termination.

The REMOVE_(NESTED_)CONTAINER API is unaffected by this flag

and can still be used. (default: false).

|

| --hadoop_home=VALUE |

Path to find Hadoop installed (for

fetching framework executors from HDFS)

(no default, look for HADOOP_HOME in

environment or find hadoop on PATH)

|

| --host_path_volume_force_creation |

A colon-separated list of directories where descendant directories are

allowed to be created by the volume/host_path isolator,

if the directories do not exist.

|

| --http_credentials=VALUE |

Path to a JSON-formatted file containing credentials. These

credentials are used to authenticate HTTP endpoints on the agent.

Path can be of the form file:///path/to/file or /path/to/file.

Example:

|

| --[no-]http_command_executor |

The underlying executor library to be used for the command executor.

If set to true, the command executor would use the HTTP based

executor library to interact with the Mesos agent. If set to false,

the driver based implementation would be used.

NOTE: This flag is *experimental* and should not be used in

production yet. (default: false)

|

| --http_executor_domain_sockets | If true, the agent will provide a unix domain sockets that the executor can use to connect to the agent, instead of relying on a TCP connection. |

| --http_heartbeat_interval=VALUE |

This flag sets a heartbeat interval (e.g. '5secs', '10mins') for

messages to be sent over persistent connections made against

the agent HTTP API. Currently, this only applies to the

LAUNCH_NESTED_CONTAINER_SESSION and ATTACH_CONTAINER_OUTPUT calls.

(default: 30secs)

|

| --image_providers=VALUE |

Comma-separated list of supported image providers,

e.g., APPC,DOCKER.

|

| --image_provisioner_backend=VALUE |

Strategy for provisioning container rootfs from images, e.g., aufs,

bind, copy, overlay.

|

| --image_gc_config=VALUE |

JSON-formatted configuration for automatic container image garbage

collection. This is an optional flag. If it is not set, it means

the automatic container image gc is not enabled. Users have to

trigger image gc manually via the operator API. If it is set, the

auto image gc is enabled. This image gc config can be provided either

as a path pointing to a local file, or as a JSON-formatted string.

Please note that the image garbage collection only work with Mesos

Containerizer for now.

See the ImageGcConfig message in `flags.proto` for the expected

format.

In the following example, image garbage collection is configured to

sample disk usage every hour, and will attempt to maintain at least

10% of free space on the container image filesystem:

|

| --ip6=VALUE |

IPv6 address to listen on. This cannot be used in conjunction

with --ip6_discovery_command.

NOTE: Currently Mesos doesn't listen on IPv6 sockets and hence

this IPv6 address is only used to advertise IPv6 addresses for

containers running on the host network.

|

| --ip6_discovery_command=VALUE | Optional IPv6 discovery binary: if set, it is expected to emit the IPv6 address on which Mesos will try to bind when IPv6 socket support is enabled in Mesos. NOTE: Currently Mesos doesn't listen on IPv6 sockets and hence this IPv6 address is only used to advertise IPv6 addresses for containers running on the host network. |

| --isolation=VALUE |

Isolation mechanisms to use, e.g., posix/cpu,posix/mem (or

windows/cpu,windows/mem if you are on Windows), or

cgroups/cpu,cgroups/mem, or network/port_mapping

(configure with flag: --with-network-isolator to enable),

or gpu/nvidia for nvidia specific gpu isolation, or load an alternate

isolator module using the --modules flag. If cgroups/all

is specified, any other cgroups related isolation options (e.g.,

cgroups/cpu) will be ignored, and all the local enabled cgroups

subsystems on the agent host will be automatically loaded by the cgroups isolator.

Note that this flag is only relevant for the Mesos Containerizer. (default: